Digital Ways Out of the Filter Bubble

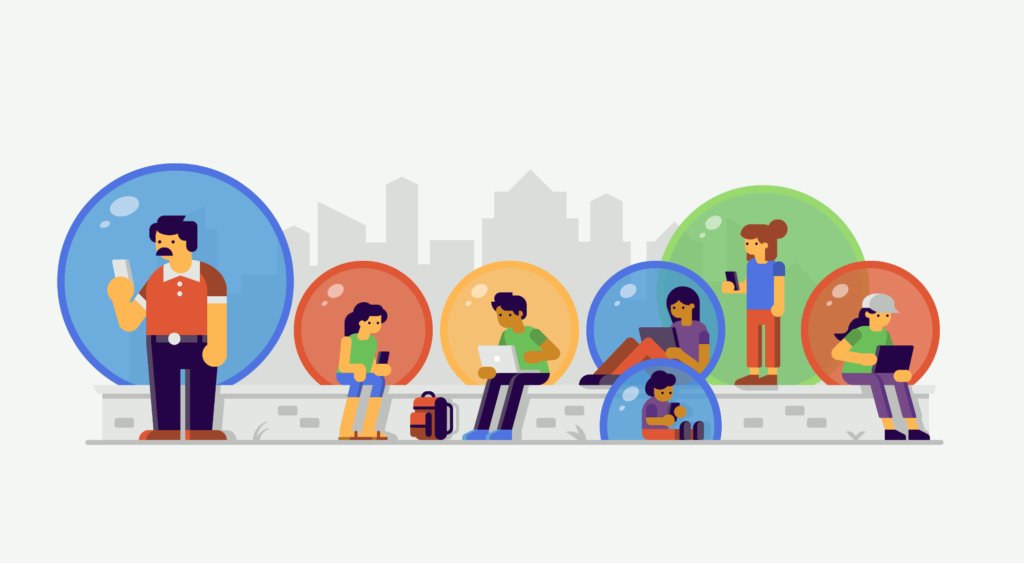

Algorithms from Google, YouTube & Co. show us search results, clips or posts that match our own opinions. This is how opinion bubbles form, but it can be avoided. If you search for something on Google, you see results that match your profile: personal preferences, previous searches and browsing behavior are all taken into account. TikTok, Twitter and YouTube work in a very similar way.

Always the Same Opinions Promote Intolerance

Facebook measures its users' browsing behavior even more precisely. The websites I connect to and view are analyzed using sophisticated methods, and from this my thematic and, ultimately, ideological preferences are calculated.

This is dangerous. If a person only ever receives the same opinions and information, they stop accepting different ones. Yet open societies thrive on debating contentious issues. And the problem goes further: not only are dissenting positions no longer acknowledged, but clashes with other opinions escalate dramatically, to the point of inciting hatred.

Trump Won the Election with Opinion Bubbles

Donald Trump's campaign for the 2016 US presidential election was built precisely on these filter bubbles. Specially developed advertising algorithms sent individually tailored messages to potential Trump voters.

If, for example, a voter's data-driven Facebook profile revealed that they disliked Muslims but were unsure whether Trump would act tough enough against them, that voter received a meticulously detailed text explaining Trump's policies on Muslims, written to match exactly what this voter wanted to hear.

People in Filter Bubbles Are Vulnerable to Manipulation

A voter who wanted Obama's health care reform repealed received emails and posts in their timeline assuring them that Trump would scrap the Obamacare provisions they disliked. Anyone fearing for their job as a metalworker or in the mining industry got a message from Donald Trump on their smartphone announcing that he would create 25 million jobs.

It doesn't matter whether these campaign promises are realistic or outright lies. What matters is promising voters exactly what they expect to hear. People living in a filter bubble are vulnerable to this kind of manipulation.

Self-Reinforcing Effects Ensure Profit

This is exactly how Google, Facebook and company make their money. On the one hand, through targeted marketing campaigns, because people inside filter bubbles are easy to influence. On the other hand, because the self-reinforcing effect keeps users on the platform longer, which means more ads are sold.

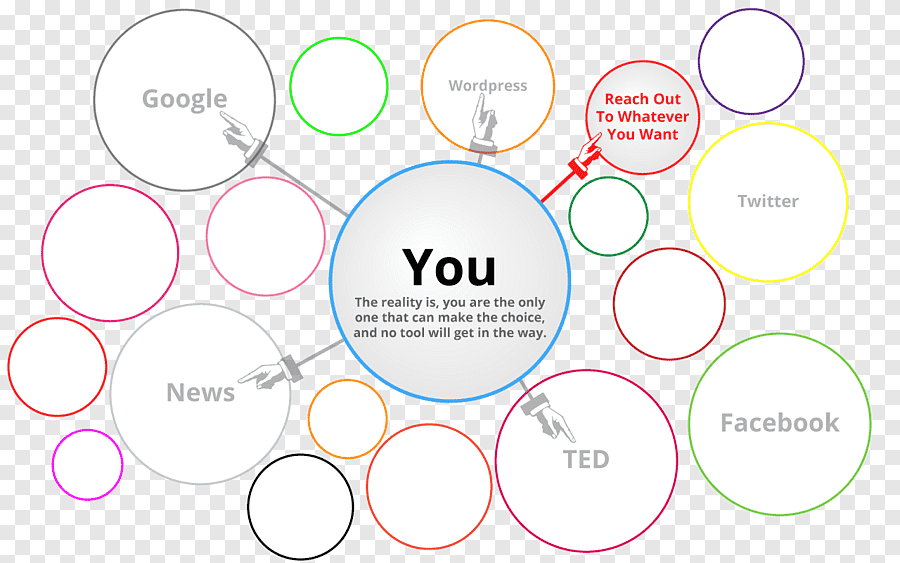

Traffic keeps increasing because internet users have become hooked on this self-reinforcing effect. However, users of Twitter or Google, for example, can do something about it:

First, switch to alternative social media platforms and use alternative search engines that don't spy on their users or build an opinion bubble around them.

Second, the algorithms of a search engine like Google or a social media platform like Twitter can sometimes be tricked. If you manage this, the opinion bubble that the algorithms have built bursts immediately.

Bursting the Filter Bubble

On Twitter, for example, the algorithm calculates a user's opinion preference from the tweets and links they click and the accounts they follow. If the user now deliberately follows people with very different opinions and reads their tweets, the algorithm registers this and re-evaluates the user's preference.

As a result, the user is also shown posts from outside their previous bubble. The algorithms of Facebook, Google and other platforms can be influenced in a similar way.
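The feedback loop described above can be illustrated with a deliberately simplified toy model. This is a hypothetical sketch, not the ranking algorithm of Twitter or any other real platform: the function names `update_preferences` and `rank_feed`, the topic labels `view_a`/`view_b` and the adjustment rate `rate` are all assumptions made for illustration. Each round, the user's topic weights move a little toward whatever they engaged with, and the feed is then sorted by those weights.

```python
from collections import Counter

# Hypothetical toy model of an engagement-driven feed.
# This is NOT the ranking algorithm of Twitter or any other real platform.


def update_preferences(prefs, engaged_topics, rate=0.2):
    """Shift each topic weight toward the share of recent engagement it received.

    prefs: dict mapping topic -> weight (weights sum to 1)
    engaged_topics: topics of the posts the user clicked, read or followed
    rate: how strongly one round of engagement moves the weights (assumed value)
    """
    if not engaged_topics:
        return dict(prefs)  # no new signal, keep the old profile unchanged
    counts = Counter(engaged_topics)  # missing topics count as 0
    total = len(engaged_topics)
    topics = set(prefs) | set(counts)
    return {
        t: (1 - rate) * prefs.get(t, 0.0) + rate * counts[t] / total
        for t in topics
    }


def rank_feed(prefs, posts):
    """Sort (post_id, topic) pairs so posts on higher-weighted topics come first."""
    return sorted(posts, key=lambda post: prefs.get(post[1], 0.0), reverse=True)


if __name__ == "__main__":
    prefs = {"view_a": 0.9, "view_b": 0.1}  # user starts inside the "view_a" bubble
    posts = [("post1", "view_a"), ("post2", "view_b")]
    for round_no in range(1, 4):
        # The user deliberately follows and reads only "view_b" accounts.
        prefs = update_preferences(prefs, ["view_b"] * 5)
        top_post = rank_feed(prefs, posts)[0][0]
        print(round_no, round(prefs["view_a"], 3), round(prefs["view_b"], 3), top_post)
```

In this toy setup, a user who engages only with the other viewpoint sees it overtake the old one in the third round, so the feed gradually tilts rather than flipping at once. Real platforms combine far more signals, so the model only shows the basic self-reinforcing loop and how deliberate engagement can shift it.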